This information is designed for application and middleware/library developers for Windows* and Linux* operating systems, and for developers in compiled languages (C, C++, etc.). It is also useful for software developers creating solutions in interpreted environments such as JavaScript*, Python*, Ruby*, etc.

Because every application is different, treat this information as a guide to help you perform your own risk assessment, not as a tool that performs the assessment for you.

Introduction

In early 2018, security researchers disclosed security vulnerabilities known as “Spectre” (CVE-2017-5753, CVE-2017-5715), which use a previously unknown technique based on speculative execution, a feature found in most modern CPUs, to observe and collect data that normally cannot be accessed. Because these vulnerabilities gather data by observing the operation of a system, rather than by exploiting a flaw in an implementation, they are referred to as side-channel attacks.

The following vulnerabilities are included in this analysis:

- Bounds Check Bypass (Variant 1)

- Branch Target Injection (Variant 2)

- Speculative Store Bypass (Variant 4)

Other speculative execution vulnerabilities are outside the scope of this article and therefore are not included in the risk assessment outlined below.

Risk Assessment

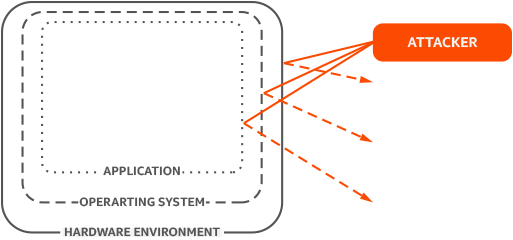

The security of a solution is influenced by its applications, operating system, and hardware environment. You must evaluate all three components to assess overall risk, and subsequently determine whether mitigation needs to be applied. Overall risk is further dependent on what is being protected, the vulnerabilities that exist, and the threat of those vulnerabilities being used against the system.

This Risk Assessment will help you assess and understand the potential exposure to speculative execution vulnerabilities in applications. Where relevant, we call out the roles of the operating system and hardware environment; however, we do not discuss specific risks and mitigations for various operating systems.

We are working with OS vendors on kernel updates to address side channel issues.

Speculative Execution Characteristics

As you read through this article, remember:

- The speculative execution vulnerabilities we focus on here can only be used to extract information. They cannot directly corrupt data, cause crashes, or modify the programmed behavior of software.

- An attacking application must share physical CPU resources with the victim application. These shared resources are used to construct the side-channel.

That means these exploits can only be leveraged by malware running on the same hardware as the target application. That malware may be executed on the hardware by a malicious logged-in user or, for some vulnerabilities, through web content loaded by a non-malicious user’s unmitigated web browser.

Steps for Assessing Risk

- Determine if the application has the potential to contain a secret.

- If the application has a secret, determine the execution environment (including hardware and OS environment) the application runs in.

- If the environment poses a risk, determine the application’s exposure.

- If the application’s exposure merits mitigation, evaluate mitigation options.
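The four steps act as a chain of gates: each step only matters if the previous one did not end the assessment. The hypothetical C function below is a minimal sketch that records only that ordering. The enum and function names are illustrative, not part of any Intel tool, and each boolean stands for a judgment you must reach yourself in that step; the code cannot make it for you.

```c
#include <stdbool.h>

/* Hypothetical names: the possible outcomes of the four assessment steps. */
enum assessment {
    NO_SECRET_NO_ACTION,   /* Step 1: nothing worth leaking */
    ENVIRONMENT_LOW_RISK,  /* Step 2: attacker cannot realistically share the CPU */
    EXPOSURE_LOW,          /* Step 3: exposure judged not to merit mitigation */
    EVALUATE_MITIGATIONS   /* Step 4: go to the mitigation guidance */
};

/* Each argument is the conclusion you reached in that step; the code only
 * encodes the order in which the steps gate one another. */
enum assessment assess(bool has_secret,
                       bool environment_poses_risk,
                       bool exposure_merits_mitigation)
{
    if (!has_secret)
        return NO_SECRET_NO_ACTION;
    if (!environment_poses_risk)
        return ENVIRONMENT_LOW_RISK;
    if (!exposure_merits_mitigation)
        return EXPOSURE_LOW;
    return EVALUATE_MITIGATIONS;
}
```

For example, if you concluded that your application holds an API key, runs on shared hardware, and exposes an interface an attacker can reach, `assess(true, true, true)` yields `EVALUATE_MITIGATIONS`.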

The following sections will walk you through the first three steps. Evaluating the mitigation options, including how to apply them, is included in a separate section.

Step 1: Determine if the Application Contains a Secret

The first step in assessing risks related to the speculative execution attacks covered here is determining whether your application contains a secret, which we’ll broadly define as anything you don’t want to be seen or known by others. “Others” could be users, applications, or even other code modules in your application.

Questions to ask yourself:

- Is there a secret in the application, or in a library accessed by the application, that needs to be protected?

- What is the impact if that secret gets out?

Common examples of secrets include an application API key, user password, credit card number, or encryption keys. Information in public databases, wikis, and RSS feeds is usually not considered secret, but again, it depends on what you don’t want others to know.

Because the vulnerabilities we’re dealing with here can only be used to leak information, there’s no need to apply mitigations to applications that do not contain secrets.

Relationships between Applications and Secrets

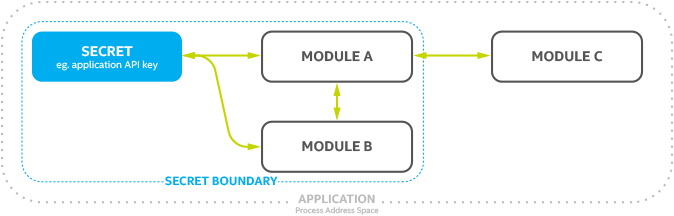

This diagram shows conceptual relationships between a secret and various modules of an application:

In this example, an application API key is the secret. A module is any logical grouping of software; it could be a library, a function, etc. Some modules in the application (Module A and Module B) need the API key to perform work. However, Module C, which also runs in the application, does not need to use the secret to perform its work.

The modules that need to use the secret exist within the Secret Boundary. Access to a Secret should be contained by as small a Secret Boundary as possible, with protection mechanisms in place to prevent modules from crossing that boundary.

To provide a real-world scenario, imagine an application that converts a GPS location into a list of restaurants near that location. Using the above diagram, Module C interfaces with users and exposes a public API. Modules A and B might use a third-party service to convert GPS locations to street addresses. Communication with that service requires an API key (the secret).

Module A and Module B work together to create the list of restaurants near the requested GPS location, and provide that list to Module C to return to the user.

How Are Secrets Protected?

In many applications, the secret may be protected by applying the Principle of Least Privilege: software components are isolated into functional units that communicate through well-defined interfaces. In addition, each functional unit has privileges configured to limit what it can access.

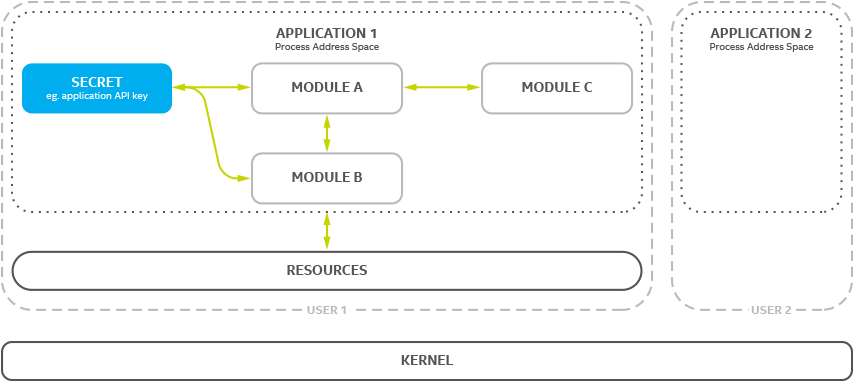

Ideally, an exploit in one unit is contained within that unit and the information it can access. The isolation is typically provided by running each functional unit under its own user ID and configuring resources so that only that user can access them. The operating system and hardware then prevent applications running under other user IDs from accessing the secret.
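As a minimal POSIX sketch of configuring a resource so that only one user ID can access it, the hypothetical helper below creates a file that only its owner can read or write. The function name is an assumption for illustration. Note that this OS-enforced isolation does not, by itself, prevent the speculative execution attacks discussed here from another user ID sharing the same physical CPU.

```c
#include <fcntl.h>
#include <sys/stat.h>
#include <unistd.h>

/* Hypothetical helper: create a new file readable and writable only by the
 * current user ID. Returns an open file descriptor, or -1 on error.
 * O_EXCL makes creation fail if the path already exists, so an existing
 * file with looser permissions is never silently reused. */
int create_owner_only_file(const char *path)
{
    int fd = open(path, O_WRONLY | O_CREAT | O_EXCL, S_IRUSR | S_IWUSR);
    if (fd < 0)
        return -1;

    /* The umask can only remove permission bits, but verify anyway that no
     * group or other bits are set before any secret is written. */
    struct stat st;
    if (fstat(fd, &st) != 0 || (st.st_mode & (S_IRWXG | S_IRWXO)) != 0) {
        close(fd);
        unlink(path);
        return -1;
    }
    return fd;
}
```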

Protection via Principle of Least Privilege

In this diagram, Application 1 and Application 2 are running as different user IDs. Resources on disk are configured to ensure secrets held on disk by Application 1 cannot be accessed by Application 2.

If Application 2 is running on the same physical CPU as Application 1 (the GPS application), speculative execution vulnerabilities could allow Application 2 to obtain secrets held by Application 1.

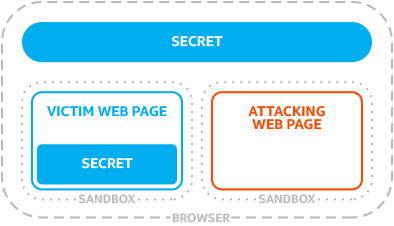

Managed Runtime in Single Address Space

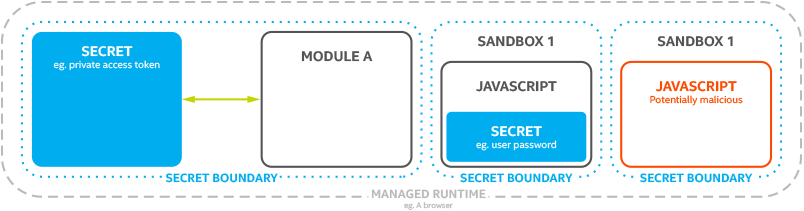

In a managed runtime environment, the “Principle of Least Privilege” might not use operating system capabilities to enforce isolation. For example, a managed runtime may isolate modules of an application using language and software constructs, where all software is still running within a single address space.

In the above illustration, the Managed Runtime simultaneously hosts one or more distinct code payloads (applets, programs, or applications). These applications are logically isolated from each other via some combination of language-based security and runtime services. The strength of the isolation varies depending on the particular managed runtime and how the particular managed runtime is used.

This isolation, called Sandboxing, essentially creates a new Secret Boundary around each software component. In the above example, Sandbox 1 contains a Secret, as does the Managed Runtime environment hosting Module A. Malicious JavaScript in Sandbox 2 should not be able to access a Secret inside either of the other Secret Boundaries.

A Managed Runtime might provide software-based isolation or process separation; process separation is additionally enforced by the operating system and hardware. More information can be found in the technical documentation for Managed Runtime Mitigations.

If your application or web application runs in a managed runtime, the speculative execution vulnerabilities covered here may allow software in another sandbox to gain access to your application’s secrets.

Step 2: Determine the Type of Environment the Application Runs In

Once you have determined your application contains a secret, next identify the environment where your application runs and evaluate how exposed this environment may be to the vulnerabilities covered here.

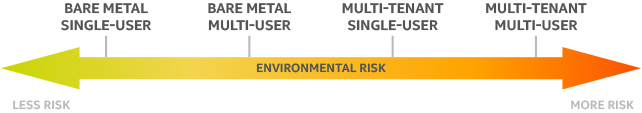

Remember, an attacking application needs to share some physical CPU resources with the target victim application. The easier it is for attacking software to share physical CPU resources with the victim software, the greater the potential exposure to the speculative execution vulnerabilities we’re discussing.

The following describes five general execution environments in which applications are deployed.

Determine the environment where your application is intended to run and then read about the potential exposure to speculative execution vulnerabilities in that environment below.

Multi-tenant, multi-user: Physical hardware is partitioned into multiple environments, where one tenant’s services and data are isolated from others. An individual tenant may allow multiple users to access their environment through shell accounts or other means. This is very common in the public cloud environment.

Bare metal, multi-user: One environment is run on the physical hardware. That environment then allows access by multiple users through shell accounts, remote access, or other capabilities. Use cases include a university environment, a corporate build server where developers log in and perform builds, or a thin-client business deployment model.

Multi-tenant, single-user: Physical hardware is partitioned into multiple environments. Each environment is running a single application or service; access is not provided to multiple external users to install or execute software. Many internet applications provide a pre-configured single-user environment in a container or virtual environment to host single-user applications.

Bare metal, single user (Client): Computers running an operating system for one user. Includes desktops and mobile devices. These typically allow users to install applications into their environment and to browse the web (which may execute untrusted JavaScript code).

Bare metal, single user (Appliance): Service appliances running on embedded devices, for example an Internet-connected camera or network-attached storage (NAS) device.

In all of the above environments, the more control you have over the environment, the lower the risk of exposure. This is good news for bare metal deployments and private cloud environments where perimeter defenses are expected to protect secrets from malicious actors/code.

Multi-tenant, public cloud environments make it possible for malicious software to run on shared hardware, as each tenant can install their own software (and potentially just-in-time or managed runtimes). Depending on how the environment is managed, tenant applications might share CPU resources with your application.

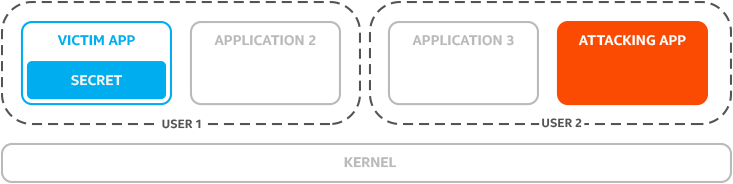

Multi-user vs Single User

In a multi-user environment, a legitimate user may install a malicious application in order to stage an attack.

In the above scenario, User 1 and User 2 are approved users in the environment. If User 1 is running the Victim App, and User 2 wants the secret it contains, User 2 may gain access to that secret via the Attacking App through speculative execution vulnerabilities included in this discussion.

In most multi-user operating system environments, User 2 has access to system information that may lower the difficulty of exploiting speculative execution vulnerabilities. For example, User 2 can determine details about which CPU core the Victim App is physically running on. User 2 can then run the Attacking App such that it runs on that core as well, thereby sharing CPU resources between the two applications.
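On Linux, for example, the CPU a process last ran on is exposed as field 39 (`processor`) of `/proc/<pid>/stat` (documented in the proc(5) man page), and that file is normally readable by other users. The hypothetical helper below is a minimal sketch that reads it; a user could then pin their own process to that core with a tool such as `taskset -c <cpu> <command>` from util-linux. It is shown only to make the exposure concrete, and mounting /proc with the `hidepid` option can restrict what other users see.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (Linux only): return the CPU that process `pid`
 * ("1234", or "self") last ran on, taken from field 39 of
 * /proc/<pid>/stat, or -1 on error. */
int last_cpu_of(const char *pid)
{
    char path[64];
    char buf[4096];
    int n = snprintf(path, sizeof path, "/proc/%s/stat", pid);
    if (n < 0 || (size_t)n >= sizeof path)
        return -1;

    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    size_t len = fread(buf, 1, sizeof buf - 1, f);
    fclose(f);
    buf[len] = '\0';

    /* Field 2 (the command name) is in parentheses and may contain spaces,
     * so start scanning after the last ')'. The next token is field 3. */
    char *p = strrchr(buf, ')');
    if (p == NULL)
        return -1;
    p++;
    for (int field = 3; field <= 39; field++) {
        while (*p == ' ')
            p++;
        if (*p == '\0')
            return -1;
        if (field == 39)
            return (int)strtol(p, NULL, 10);
        p = strchr(p, ' ');   /* skip to the end of this field */
        if (p == NULL)
            return -1;
    }
    return -1;
}
```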

Questions to ask yourself on a multi-user system:

- How likely is it that a user with access to the environment will attempt to exfiltrate data from another user?

- How likely is it that a user with access to the environment will install or run untrusted software in the environment?

- Many server-side package managers pull software from arbitrary sources; a user may unknowingly download and install a malicious component that stages an attack against any user on a multi-user system.

In a single user environment, User 1 would be the only user on the system. That user would need to install software, and run it, to stage an attack against themselves. This may be done unknowingly, for example by accessing a web page that contains malicious JavaScript.

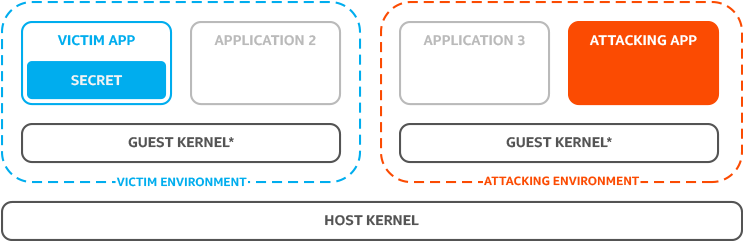

Multi-tenant

In multi-tenant environments, each tenant is protected from other tenants.

Above we have two tenants isolated from each other. One runs in the Victim Environment, and another in the Attacking Environment. There are generally three ways to provide isolation between tenants, listed from lowest level of isolation (greatest risk) to highest (lowest risk):

- Operating system/software-based multi-tenant (Docker*, Kubernetes, OpenShift*).

- Virtual machine-based multi-tenant (QEMU/KVM, Xen, Microsoft Hyper-V).

- Every tenant has their own dedicated physical hardware (not illustrated in diagram).

An Attacking App faces multiple challenges in staging an attack against the Victim App, and these become more difficult as isolation between applications increases:

The Attacking App needs to share physical resources with the Victim App. This is a significant step, as it requires the attacker to be able to influence:

- Which physical platform the Attacking App’s environment runs on.

- Which physical resources the Attacking or Victim Apps are scheduled to use.

The closer the relationship between Victim and Attacker, the higher the risk of attack. Victim Apps may expose interfaces which can be exploited across the tenant boundary.

Exfiltrating data through known side channels requires statistical analysis, and gathering the data for that analysis requires the victim to perform known actions. The viability of the analysis depends on both the quality and the quantity of the data. The more the Attacking App can control the behavior of the Victim App, the easier it is to improve the quantity and quality of the data it collects.
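A simplified statistical model shows why both quantity and quality matter. Assume, for illustration only, that each measurement is an independent sample with noise standard deviation $\sigma$, that the secret-dependent timing difference the attacker wants to detect is $\Delta$, and that the attacker wants the standard error of the averaged measurement to be at most $\Delta / k$ for some safety margin $k$. Averaging $N$ samples then gives:

```latex
\mathrm{SE} = \frac{\sigma}{\sqrt{N}}, \qquad
\frac{\sigma}{\sqrt{N}} \le \frac{\Delta}{k}
\;\Longrightarrow\;
N \ge \left( \frac{k\,\sigma}{\Delta} \right)^{2}
```

For example, with noise four times the signal ($\sigma = 4\Delta$) and $k = 3$, this gives $N \ge (3 \cdot 4)^2 = 144$ samples. Halving the noise cuts the required samples by a factor of four, and an attacker who can make the victim repeat an action can collect more samples. Real attacks are more complex than this model.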

Even when the multi-tenant provider is fully mitigated against the speculative execution vulnerabilities covered here, protecting against side-channel tenant-to-tenant attacks may still require changes in your application (see Mitigations: Spectre variant 1). Some multi-tenancy providers may require that your application or its container environment opt in to mitigations (see Mitigations: Spectre variant 2).
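On Linux, one concrete per-thread opt-in mechanism is the `prctl()` speculation control interface described in the prctl(2) man page: `PR_SPEC_STORE_BYPASS` (Speculative Store Bypass, Variant 4) is available from kernel 4.17 and `PR_SPEC_INDIRECT_BRANCH` (indirect branch speculation, Variant 2) from kernel 4.20. The helper names below are hypothetical, and whether your provider expects this mechanism or a container-level setting is an assumption to confirm with that provider. On kernels without the interface, the calls simply fail with an error.

```c
#include <sys/prctl.h>

/* Hypothetical helper (Linux only). `which` is PR_SPEC_STORE_BYPASS or
 * PR_SPEC_INDIRECT_BRANCH. Asks the kernel to disable that speculation for
 * the calling thread, i.e. to opt in to the mitigation.
 * Returns 0 on success, or -1 with errno set (for example EINVAL on kernels
 * without this interface, or ENXIO when per-task control is unavailable). */
int opt_in_speculation_mitigation(unsigned long which)
{
    return prctl(PR_SET_SPECULATION_CTRL, which, PR_SPEC_DISABLE, 0UL, 0UL);
}

/* Hypothetical helper: returns the current state as a bit mask of
 * PR_SPEC_PRCTL, PR_SPEC_ENABLE, PR_SPEC_DISABLE, PR_SPEC_FORCE_DISABLE, ...,
 * 0 if the kernel reports the CPU as not affected, or -1 on error. */
int speculation_state(unsigned long which)
{
    return prctl(PR_GET_SPECULATION_CTRL, which, 0UL, 0UL, 0UL);
}
```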

Questions to ask yourself:

- Does your application expose a mechanism that can be connected to from outside its environment (for example, a network connection)?

- Does your hosting environment have mitigations applied to prevent cross-tenant attacks (see Mitigations: Spectre Variant 2)?
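On Linux, one input to the second question is the sysfs directory `/sys/devices/system/cpu/vulnerabilities/`, which has one file per issue (for example `spectre_v1`, `spectre_v2`, and `spec_store_bypass`) containing a line such as `Not affected`, `Vulnerable`, or `Mitigation: <details>`. Inside a virtual machine this reports the guest kernel’s view, not a guarantee about the host, so treat it as a starting point rather than an answer. The helper name below is hypothetical; this is a minimal sketch.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper (Linux only): copy the kernel's one-line status for
 * a speculative execution issue, e.g. "spectre_v1", "spectre_v2" or
 * "spec_store_bypass", into `out`. Returns 0 on success, or -1 if the file
 * is missing (older kernels), unreadable, or `out` is too small. */
int read_vulnerability_status(const char *name, char *out, size_t outlen)
{
    char path[256];
    if (outlen < 2)
        return -1;
    int n = snprintf(path, sizeof path,
                     "/sys/devices/system/cpu/vulnerabilities/%s", name);
    if (n < 0 || (size_t)n >= sizeof path)
        return -1;

    FILE *f = fopen(path, "r");
    if (f == NULL)
        return -1;
    char *ok = fgets(out, (int)outlen, f);
    fclose(f);
    if (ok == NULL)
        return -1;
    out[strcspn(out, "\n")] = '\0';   /* drop the trailing newline */
    return 0;
}
```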

Mitigation for some speculative execution vulnerabilities may require that the hardware have updated microcode and that the host kernel or hypervisor use the hardware mitigations appropriately when switching between tenants.

Depending on your application, Bounds Check Bypass (variant 1) vulnerabilities may require mitigations to be applied to your application regardless of Branch Target Injection (variant 2) mitigation in the hosting environment.
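To illustrate the kind of application-level change Variant 1 can call for, the sketch below clamps an array index with a branch-free mask after the bounds check, modeled on the generic `array_index_mask_nospec()` technique in the Linux kernel. The function names are hypothetical and this is not Intel’s prescribed mitigation; Intel’s guidance for x86 also describes an LFENCE speculation barrier. A compiler is free to turn the mask back into a branch or optimize it away, which is why the kernel hides the mask from the optimizer, so check the generated code before relying on this pattern.

```c
#include <limits.h>
#include <stddef.h>
#include <stdint.h>

/* Returns all ones when index < size and zero otherwise, computed without
 * a conditional branch. Assumes 0 < size <= SIZE_MAX / 2 and
 * index <= SIZE_MAX / 2. */
static size_t index_mask_nospec(size_t index, size_t size)
{
    /* The top bit of (index | (size - 1 - index)) is clear only when
     * index < size, because size - 1 - index wraps around otherwise. */
    size_t top = ~(index | (size - 1 - index)) >> (sizeof(size_t) * CHAR_BIT - 1);
    return (size_t)0 - top;   /* 1 -> all ones, 0 -> zero */
}

/* Hypothetical bounds-checked load. If the CPU speculatively runs past the
 * check with an out-of-bounds index, the mask forces the speculative index
 * to 0 instead of letting it reach attacker-chosen memory. */
uint8_t load_byte(const uint8_t *array, size_t size, size_t index)
{
    if (index < size) {
        index &= index_mask_nospec(index, size);
        return array[index];
    }
    return 0;
}
```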

Bare metal: Client

In a desktop/client environment, applications typically run natively on the system or within a managed runtime (e.g., JavaScript running in a web browser).

Web applications running in a user’s web browser are at a higher risk of exposing secrets if mitigations against both Bounds Check Bypass (variant 1) and Branch Target Injection (variant 2) are not applied to the browser.

Questions to ask yourself:

- Do you load or access private data or secrets in a web page or web application (log-ins, cookies, email content read via webmail, etc.)?

- For example: if your web page receives user login and password information, you might need to take steps to protect that password if the user’s browser is not mitigated against both variant 1 and variant 2.

- Does your application warn the user if they are running on a non-mitigated browser?

Your application may be at greater risk from other applications if:

- It provides any mechanism for an attacking application to directly influence its execution.

- Untrusted software is executed using the same physical CPU resources. Even in an interpreted/sandboxed environment, the application may be attacked from that sandboxed software.

Bare Metal: Appliance

A computer appliance is typically a fixed-purpose computer running a vendor-managed operating system image. If you are developing a computer appliance and either of the following is true, your exposure to these attacks is reduced:

- You control all software installed and updated onto the device

- The device does not expose a mechanism for untrusted software to be executed on the device. This includes Just-in-Time (JIT) or sandboxed software, such as software from an app store or web browser.

Extracting data with the speculative execution attacks covered here requires that the attacker can influence execution on the CPU and can use a shared CPU resource as a side channel to exfiltrate data.

In order to retrieve data through a side-channel created using a shared CPU resource, the attacking software must be running on the CPU. If your appliance does not allow untrusted software to run on the device, there is no opportunity for the attacking software to run on the same CPU. If the secrets on the appliance are important enough, you may still choose to apply speculative execution mitigations.

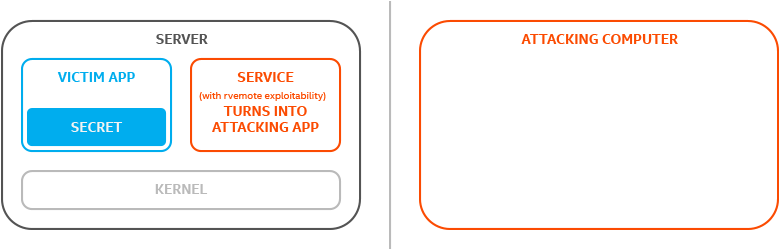

Server with other exploitable service

If a remote exploit exists in another service running on the platform, an attacking computer could use it to install/run malicious software on the target system and circumvent isolation practices:

In this situation, an Attacking Computer exploits a pre-existing remote vulnerability in an exposed service. The attacker can use that service to inject, install, or execute software that the attacker controls, thereby converting that benign service into an Attacking App. Traditionally, such remote exploits would have their scope of impact limited by running the Service in a restricted environment that adheres to the security principle of least privilege.

Questions to ask yourself:

- What external services are exposed by the environment hosting your application and solution?

- Are you running versions of those services that are not patched against known vulnerabilities or exposures?

- Are those services tightly coupled with your application?

- Can you further isolate those services from your application?

Step 3: Analyze the Application’s Exposure

At this point you have:

- Determined your application has secrets to protect and may rely on protections that the speculative execution vulnerabilities covered here could bypass.

- Determined your application is running in an environment which might allow an attacking application to share physical CPU resources.

The next step is to assess your application’s risk of exposure to side-channel vulnerabilities, specifically those covered here. This includes the attack surface (everywhere an unauthorized user or process can attempt to inject or extract data from the application) and how accessible that attack surface is to an attacker.

Due to the complexity and custom nature of applications, it’s impractical to present an analysis for every application. The key question to ask yourself:

- Can untrusted code be executed alongside the target application?

Some things to keep in mind are as follows:

Applications with the least exposure are those where the operating environment does not readily allow untrusted code to execute, such as hardware appliances and other closed systems.

Remote services present similar barriers. The attacker must access the remote environment and run code on the target system, which means they must compromise the network security infrastructure for the target environment and then the server operating system to inject code onto the system.

Applications running in cloud environments or on personal systems present the highest potential risk.

In cloud environments other virtual machines may be executing on the same hardware as the target application and the attacker may have legitimate access to those systems.

On personal systems large numbers of applications are often running simultaneously, and the scope and scale of malware suggests they are the easiest systems for attackers to compromise. They typically don’t restrict what applications can run on them, making it easier for arbitrary code to run alongside sensitive applications.

Step 4: Evaluating Mitigation Options

The information presented here is designed to help you assess the risk from specific speculative execution vulnerabilities to application and middleware/library development.

If you have determined you have secrets to protect, the execution environment your application runs in poses a risk, and the exposure merits mitigation, then you need to evaluate mitigation options.

We have developed guidance for each of the speculative execution vulnerability variants covered in this discussion. Refer to the following for mitigation guidance:

- Bounds Check Bypass (Variant 1)

- Branch Target Injection (Variant 2)

- Speculative Store Bypass (Variant 4)
